This Proof-of-Concept uses IOWN APN, which significantly reduces communication latency, to distribute GPUs (AI computing devices) and large-scale storage across sites, even though the two have traditionally had to be physically adjacent. The initiative aims to build “distributed data centers” and bring them into practical use in society.

Between November and December 2025, an actual IOWN APN line will be deployed between Fukuoka (GPU) and Tokyo (storage) to conduct a practicality assessment toward commercial implementation.

【Background】

In recent years, the widespread adoption of large language models (LLMs), a key category of generative AI, has led to a rapid surge in demand for AI development infrastructure. Whether on-premises or in the cloud, such infrastructure requires not only the GPUs themselves but also large-scale storage used to submit jobs and store computation results.

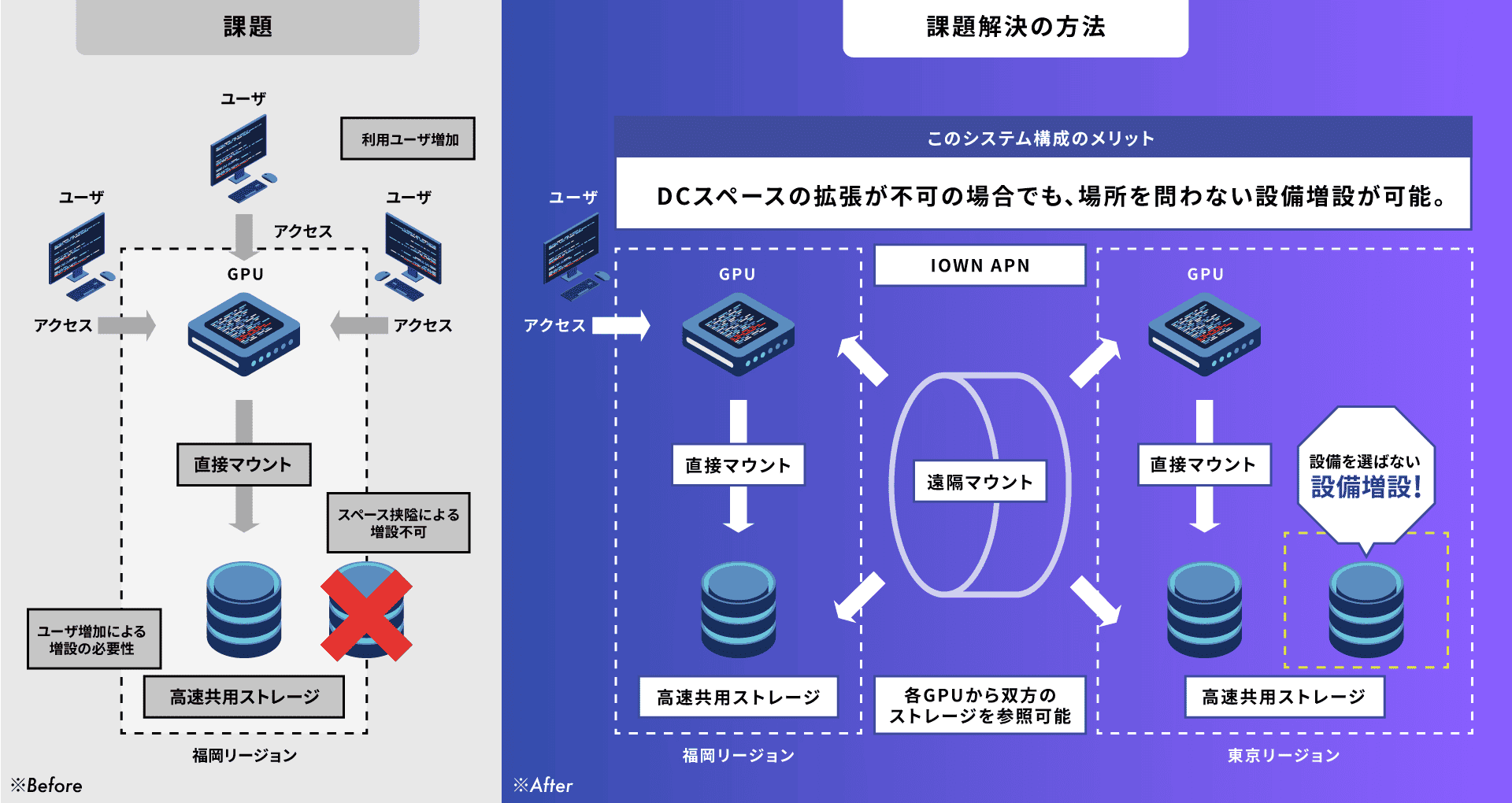

There is growing demand to expand computing capacity flexibly beyond the physical installation space of a single data center, and to keep training data and computation results within an organization's own facilities. However, because of communication latency, GPUs and storage currently have to be placed next to each other. As a result, adding new devices is constrained, and organizations that want to place storage at a specific site, such as their own facilities, cannot overcome the geographical restriction. These issues stand in the way of building flexible AI development infrastructure.

A mechanism that provides high-speed, large-capacity, low-latency connectivity is expected to address these challenges. Against this backdrop, the four companies have partnered to launch this Proof-of-Concept.

▼Examples of Challenges in Building AI Development Infrastructure

【Overview and Results of Preliminary Verification】

As part of the preliminary verification, a performance test of GMO GPU Cloud was conducted in July 2025 within a simulated remote environment assuming the distance between Fukuoka (GPU) and Tokyo (storage), approximately 1,000 km. The test confirmed that two trial tasks could be stably completed.

The experimental results from this simulated remote verification, together with the subsequent discussion and analysis, are important findings for building “distributed data centers,” a concrete use case expected to benefit from low latency, and they strengthen the feasibility of practical deployment.

Overview of the Preliminary Verification

• Installed the latency adjustment device “OTN Anywhere” in the Fukuoka data center

• Using GMO GPU Cloud, executed two trial tasks (image recognition and language learning) under various latency conditions (e.g., 15 ms, the value assumed for Fukuoka–Tokyo) and measured and evaluated changes in task completion times when using remote storage
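To put the latency conditions in context, the following is a minimal sketch of the propagation delay that distance alone imposes on an optical fiber link. It is not part of the verification itself. The refractive index (1.47) is an assumed typical value for silica fiber, and the function names are hypothetical. The announcement does not state how the 15 ms condition was derived or whether it is a one-way or round-trip figure, so this sketch only estimates a physical lower bound; actual fiber routes are longer than the straight-line distance, and network equipment adds further delay.

```python
# Rough lower bound on propagation delay over optical fiber.
# Assumption: refractive index of 1.47 (typical for silica fiber, not from the source).

SPEED_OF_LIGHT_KM_S = 299_792.458   # speed of light in vacuum, km/s
FIBER_REFRACTIVE_INDEX = 1.47       # assumed value


def one_way_delay_ms(route_km: float,
                     refractive_index: float = FIBER_REFRACTIVE_INDEX) -> float:
    """Return the one-way propagation delay in milliseconds for a fiber route."""
    if route_km < 0:
        raise ValueError("route_km must be non-negative")
    speed_in_fiber_km_s = SPEED_OF_LIGHT_KM_S / refractive_index
    return route_km / speed_in_fiber_km_s * 1000.0


def round_trip_delay_ms(route_km: float,
                        refractive_index: float = FIBER_REFRACTIVE_INDEX) -> float:
    """Return the round-trip propagation delay in milliseconds."""
    return 2.0 * one_way_delay_ms(route_km, refractive_index)


if __name__ == "__main__":
    distance_km = 1000.0  # approximate Fukuoka-Tokyo distance cited in the text
    print(f"one-way:    {one_way_delay_ms(distance_km):.2f} ms")
    print(f"round-trip: {round_trip_delay_ms(distance_km):.2f} ms")
```

Under these assumptions, propagation over about 1,000 km accounts for roughly 5 ms each way, so a test condition of 15 ms leaves headroom for a longer physical route and equipment delay.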

Results of the Preliminary Verification

• In both tasks, benchmark scores were confirmed to change as the configured latency increased

• Under the Fukuoka–Tokyo equivalent latency condition (15 ms) set in this verification, the performance decline was approximately 12%

For details and results of the GPU–storage connection performance test in a simulated remote environment using “IOWN APN,” see:

URL https://internet.gmo/news/article/87/

【Overview of This Proof-of-Concept】

In this Proof-of-Concept, we will leverage the high-capacity, low-latency features of the next-generation communication platform “IOWN APN” to technically verify, between November and December 2025, the feasibility of inter-site configurations using “GPUs” at a Fukuoka data center and “storage” at a Tokyo data center. The planned implementation will use actual IOWN APN lines, enabling practicality assessments in an environment closer to commercial deployment.

• Connect the Fukuoka data center and the Tokyo data center via IOWN APN

• Using GMO GPU Cloud, execute two trial tasks—image recognition and language learning—and measure changes in task completion times

• Compare task completion times in three conditions: conventional on-site adjacency, conventional Ethernet leased-line connection, and IOWN APN connection, to evaluate practicality for commercial implementation
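The three-way comparison described above can be sketched as follows. This is only an illustration of how completion times might be compared against the on-site baseline; the class, function, and condition names are hypothetical, and the completion times in the usage example are placeholder values, not measurements from this Proof-of-Concept.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TaskResult:
    """Completion time of one trial task under one connection condition."""
    condition: str        # e.g. "on_site", "ethernet_leased_line", "iown_apn"
    completion_s: float   # task completion time in seconds


def relative_increase_pct(baseline_s: float, measured_s: float) -> float:
    """Return the percentage increase in completion time relative to the baseline."""
    if baseline_s <= 0:
        raise ValueError("baseline_s must be positive")
    return (measured_s - baseline_s) / baseline_s * 100.0


def compare_to_baseline(results: list[TaskResult],
                        baseline_condition: str) -> dict[str, float]:
    """Map each condition to its completion-time increase versus the baseline."""
    baseline = next(r for r in results if r.condition == baseline_condition)
    return {
        r.condition: relative_increase_pct(baseline.completion_s, r.completion_s)
        for r in results
    }


if __name__ == "__main__":
    # Placeholder numbers for illustration only; NOT results from the PoC.
    results = [
        TaskResult("on_site", 100.0),
        TaskResult("ethernet_leased_line", 130.0),
        TaskResult("iown_apn", 110.0),
    ]
    for condition, pct in compare_to_baseline(results, "on_site").items():
        print(f"{condition}: {pct:+.1f}% vs on-site")
```

Using the on-site adjacent configuration as the baseline keeps the two remote conditions directly comparable, since both are expressed as an increase over the same reference time.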

【Roles of Each Company】


| Company | Role |
| --- | --- |
| GMO Internet, Inc. | Provision of GPUs and storage for GMO GPU Cloud; application implementation and execution of benchmark tests |
| NTT East Corporation | IOWN APN technology verification and provision of the proof-of-concept environment |
| NTT West Corporation | IOWN APN technology verification and provision of the proof-of-concept environment |
| QTnet, Inc. | Provision of the proof-of-concept environment within the Fukuoka data center |

【Future Developments】

If successful, this technical proof-of-concept will mark a major turning point for the future of AI infrastructure and an important step toward realizing the new information and communications platform envisioned by IOWN, one that surpasses the limits of current ICT. IOWN APN (NTT East and NTT West’s “All-Photonics Connect powered by IOWN”) makes high-capacity, low-latency communications possible, enabling new value creation that goes beyond conventional cloud services. This will allow GMO GPU Cloud to deliver flexible, innovative solutions that meet diverse AI utilization needs.

Going forward, we will advance full-scale field verification using actual IOWN APN lines connecting real sites, in order to evaluate the performance of GMO GPU Cloud and explore the potential for commercial deployment. Building on the results of this proof-of-concept, our future goal is to realize a world-class, sustainable AI infrastructure. By connecting various sites and facilities nationwide through IOWN APN and organically linking them via this AI infrastructure, we aim to create a distributed information society where AI resources can be accessed from anywhere. This will enable optimal allocation of AI resources on a national scale, the realization of disaster-resilient distributed infrastructure, and the co-creation of new foundational infrastructure for society.