AI Summary

NVIDIA’s Multi-Instance GPU (MIG) feature is now available on “GMO GPU Cloud.”

Users can split a single GPU into up to 7 instances, allowing for more efficient and cost-effective use of resources.

This feature is especially useful for running different workloads in parallel and optimizing resource usage, and it is available at no additional cost.

【Background of NVIDIA MIG Integration】

GMO GPU Cloud, equipped with NVIDIA H200 GPUs, ranks 37th globally and 6th in Japan on the TOP500 list of supercomputer performance. It also ranks No. 1 among commercial cloud services in Japan, demonstrating top-tier performance (*1).

At the same time, workload requirements have become increasingly diverse. Depending on their scale or characteristics, some jobs do not require large amounts of computational resources, while other workloads demand high throughput through parallel execution of multiple jobs.

To address these evolving needs, GMO Internet has integrated NVIDIA MIG technology to provide customers with a more flexible and efficient use of computing resources, making the most of job schedulers (*2).

(*1) GMO Internet Group, Inc. press release dated November 19, 2024:

“GMO GPU Cloud by GMO Internet Group ranked 37th in the world in the TOP500 supercomputer ranking”

https://www.gmo.jp/news/article/9266/

(*2) A job scheduler is a tool that automates the execution of tasks on a computer system. It can run jobs based on predefined times or conditions to improve system management efficiency.

【About MIG Functionality in GMO GPU Cloud】

MIG (Multi-Instance GPU) allows a single NVIDIA GPU to be split into up to seven instances. Since each server in GMO GPU Cloud is equipped with eight NVIDIA GPUs, up to 56 instances can be created on a single server. Each split instance is allocated its own high-bandwidth memory, cache, and compute cores, and operates in a completely isolated environment.
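The instance count above is simple arithmetic, sketched here in Python for illustration. The two constants come from the text; the function name and its parameter are hypothetical and are not part of any GMO GPU Cloud or NVIDIA interface.

```python
# Figures taken from the text: up to 7 MIG instances per GPU, 8 GPUs per server.
MAX_MIG_INSTANCES_PER_GPU = 7
GPUS_PER_SERVER = 8


def max_instances_per_server(gpus_in_mig_mode: int = GPUS_PER_SERVER) -> int:
    """Upper bound on MIG instances when `gpus_in_mig_mode` GPUs are fully split.

    Hypothetical helper for illustration only.
    """
    if not 0 <= gpus_in_mig_mode <= GPUS_PER_SERVER:
        raise ValueError(f"expected 0..{GPUS_PER_SERVER} GPUs, got {gpus_in_mig_mode}")
    return gpus_in_mig_mode * MAX_MIG_INSTANCES_PER_GPU


print(max_instances_per_server())   # 56: all eight GPUs split seven ways
print(max_instances_per_server(4))  # 28: only half of the GPUs split
```

The figure of 56 is an upper bound that applies only when every GPU is split into the maximum number of instances.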

Customers can flexibly customize the instance configuration according to the scale and nature of their workloads. For example, larger jobs can be assigned one or more full GPUs, while smaller jobs can be assigned individual MIG instances. This enables optimal resource utilization and improves cost efficiency.
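As a rough illustration of such a mixed configuration, the following Python sketch tallies how many GPUs on one server would be kept whole and how many would be split. It is a simplified model, not the service's actual scheduling logic: it assumes every MIG request is for the smallest instance size (one seventh of a GPU), and the `Job` class, the `plan_server` function, and the job names are all hypothetical.

```python
import math
from dataclasses import dataclass

# Figures taken from the text.
GPUS_PER_SERVER = 8
MAX_MIG_INSTANCES_PER_GPU = 7


@dataclass
class Job:
    """Hypothetical job description used only for this sketch."""
    name: str
    full_gpus: int = 0      # whole GPUs requested (larger jobs)
    mig_instances: int = 0  # smallest-size MIG instances requested (smaller jobs)


def plan_server(jobs: list[Job]) -> dict[str, int]:
    """Count whole GPUs, split GPUs, and leftover capacity for one server."""
    full = sum(job.full_gpus for job in jobs)
    mig = sum(job.mig_instances for job in jobs)
    # Assumption: MIG instances are packed onto as few GPUs as possible.
    gpus_in_mig_mode = math.ceil(mig / MAX_MIG_INSTANCES_PER_GPU)
    used = full + gpus_in_mig_mode
    if used > GPUS_PER_SERVER:
        raise ValueError(f"requests need {used} GPUs, server has {GPUS_PER_SERVER}")
    return {
        "full_gpus": full,
        "gpus_in_mig_mode": gpus_in_mig_mode,
        "idle_gpus": GPUS_PER_SERVER - used,
        "spare_mig_instances": gpus_in_mig_mode * MAX_MIG_INSTANCES_PER_GPU - mig,
    }


jobs = [
    Job("llm-finetune", full_gpus=4),
    Job("inference-a", mig_instances=3),
    Job("inference-b", mig_instances=6),
]
print(plan_server(jobs))
# {'full_gpus': 4, 'gpus_in_mig_mode': 2, 'idle_gpus': 2, 'spare_mig_instances': 5}
```

In this example, nine small instances fit on two split GPUs, leaving four GPUs whole for the large job and two GPUs free.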

MIG technology is available at no additional cost to customers using the “Dedicated Plan” of GMO GPU Cloud.

【About GMO GPU Cloud】(https://gpucloud.gmo/)

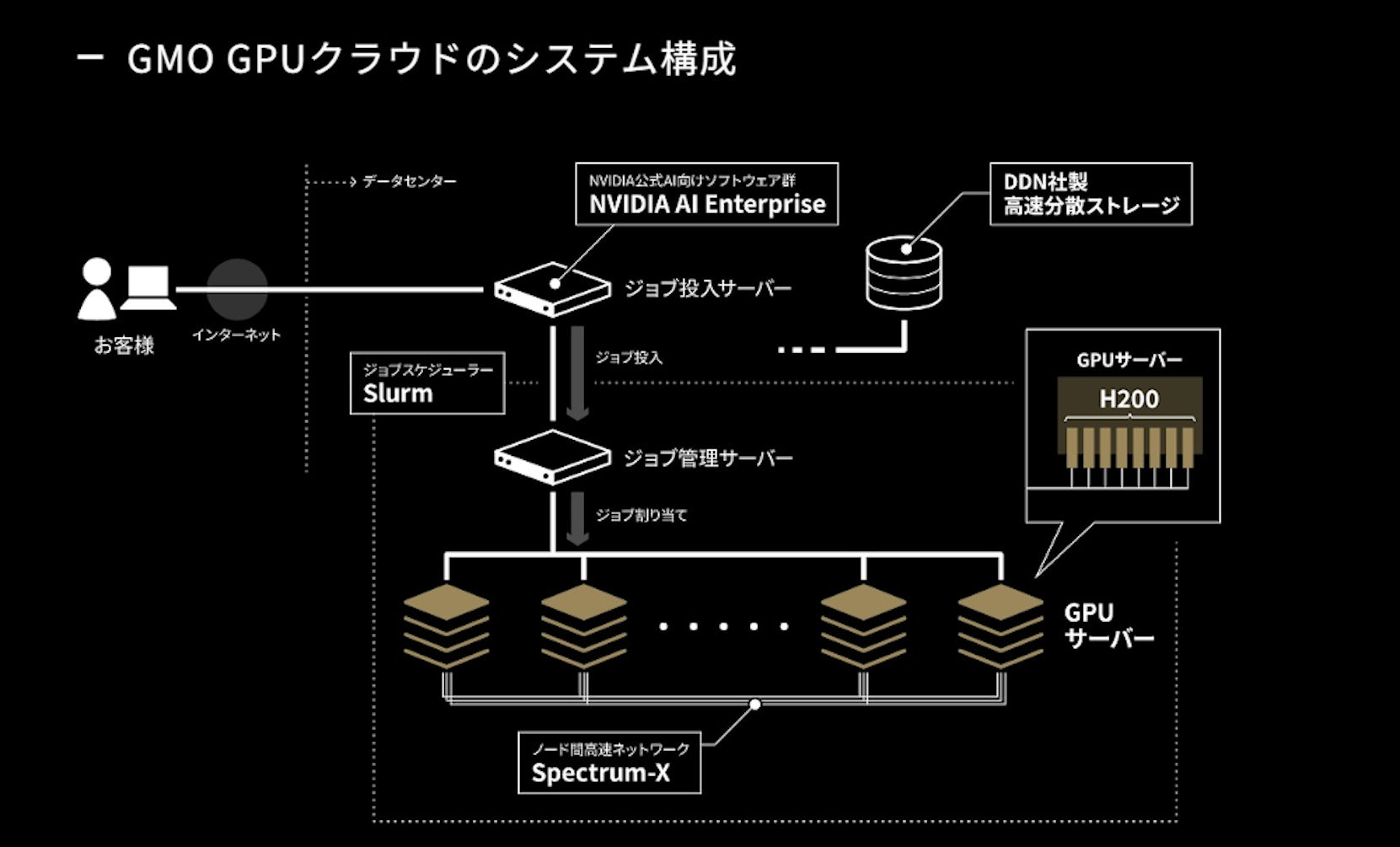

Launched on Friday, November 22, 2024, GMO GPU Cloud is one of the fastest GPU cloud services in Japan, powered by NVIDIA technology. It leverages the high-performance NVIDIA H200 GPUs to significantly shorten development and research cycles while reducing costs.

Furthermore, GMO GPU Cloud is the first cloud service provider in Japan to adopt both the NVIDIA H200 GPU and NVIDIA Spectrum-X, designed for AI networking. The combination delivers a high-performance GPU cloud environment optimized for generative AI and machine learning.

Through this service, GMO Internet provides enterprises and research institutions working in the fields of generative AI and high-performance computing (HPC) with a high-performance compute environment that requires no infrastructure tuning, contributing to faster development cycles, cost reductions, and the growth of Japan’s AI industry.

■ Features of GMO GPU Cloud

1. Equipped with NVIDIA H200 GPUs

The NVIDIA H200 GPU is optimized with significantly expanded memory capacity and memory bus bandwidth for the development and research of large language models. It offers about 1.7 times the memory capacity and approximately 1.4 times the memory bandwidth of the NVIDIA H100 GPU.

2. First in Japan to adopt NVIDIA Spectrum-X

GMO GPU Cloud is the first cloud provider in Japan to implement NVIDIA Spectrum-X, which dramatically enhances performance and scalability of Ethernet networking for AI workloads.

3. Cloud network acceleration with NVIDIA BlueField-3 DPUs

The NVIDIA BlueField-3 Data Processing Unit (DPU) accelerates GPU access to data, streamlines AI application delivery, and enhances the security of cloud infrastructure.

4. Ultra-high-speed storage by DDN

GMO GPU Cloud uses DDN’s high-speed storage, optimized for performance with the NVIDIA platform. It delivers a powerful, all-in-one AI development platform.

5. Rapid environment setup and management with NVIDIA AI Enterprise

NVIDIA AI Enterprise is an end-to-end, cloud-native software platform that accelerates data science pipelines and streamlines the development and deployment of production-grade copilots and other generative AI applications.

6. Industry-standard job scheduler Slurm

GMO GPU Cloud adopts Slurm, the industry-standard job scheduler for cluster systems, offering resource allocation, job control, and monitoring functions.
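The H200 versus H100 comparison in feature 1 can be checked with a short calculation. The specification values below are not stated in this release; they are NVIDIA's published figures for the SXM variants of each GPU (an assumption about which variants are being compared), and the variable names are illustrative only.

```python
# Published NVIDIA specifications (assumed SXM variants; not stated in this release).
H100_MEMORY_GB = 80
H200_MEMORY_GB = 141
H100_BANDWIDTH_TBPS = 3.35
H200_BANDWIDTH_TBPS = 4.8

memory_ratio = H200_MEMORY_GB / H100_MEMORY_GB
bandwidth_ratio = H200_BANDWIDTH_TBPS / H100_BANDWIDTH_TBPS

print(f"Memory capacity:  {memory_ratio:.2f}x")     # 1.76x
print(f"Memory bandwidth: {bandwidth_ratio:.2f}x")  # 1.43x
```

Both results are in line with the "about 1.7 times" and "approximately 1.4 times" figures quoted above.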

■ Pricing (Excluding Tax)

(*3) Charges apply only for usage exceeding 50% of the contracted monthly time.

■ Use Scenarios

• High-speed training and fine-tuning of large language models

• Training computer vision models using large-scale datasets

• Scientific computing for drug discovery, weather forecasting, and more

• Research and development requiring high-performance computing (HPC)